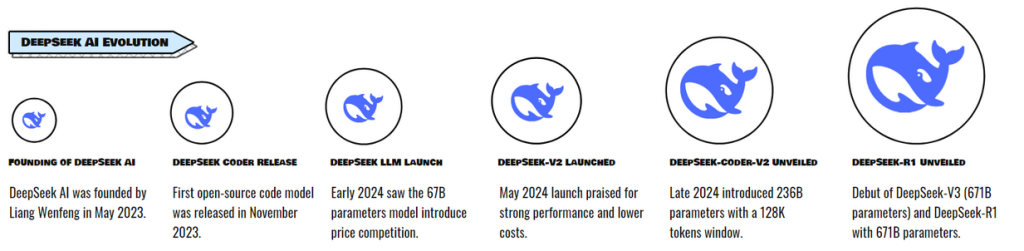

If you are a fan of AI technology, you have surely come across the term ‘DeepSeek’ recently. Originating from China, the model has gained traction all over the world. DeepSeek-R1 was released on January 20, 2025 by DeepSeek, the company founded by Liang Wenfeng. At present, the model is free to use and is available on Apple’s App Store and as a web application.

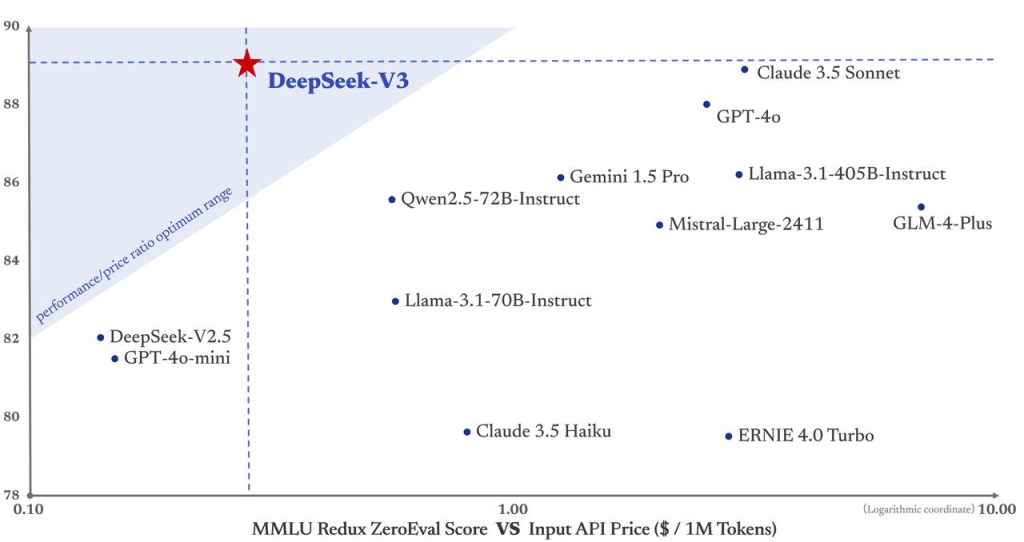

DeepSeek is being compared with other popular AI platforms such as ChatGPT, Claude, Perplexity and Gemini, and on several benchmarks it matches or surpasses them. The company has also reported that model development took just 2 months and that the budget was only about $6 million, a reported 3%-5% of what OpenAI is estimated to spend on building its next model. Sounds interesting, right?

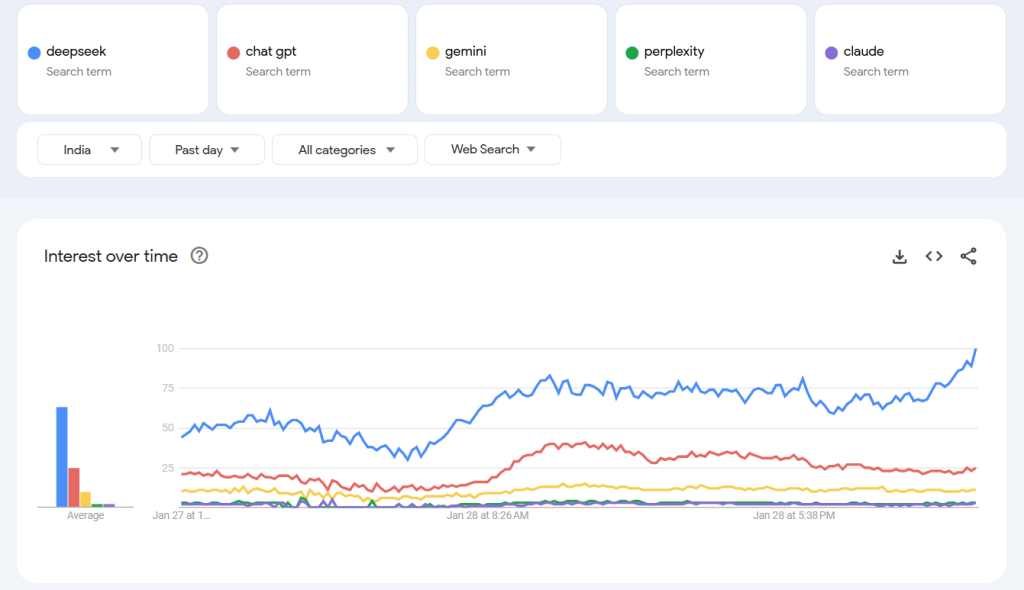

Google Trends charts are jaw-dropping. ChatGPT, Gemini, Perplexity & Claude are barely in the frame.

If you are a technology enthusiast, you are probably keen to explore the model further. So we have put together a detailed article covering DeepSeek’s technical overview, benchmarks, tests across different scenarios, comparisons, limitations and much more!

DeepSeek – A Technical Overview

DeepSeek is an advanced AI model designed for reasoning tasks and explainable AI. Unlike many models, DeepSeek provides both the output and the thought process behind it. This feature makes it a key player in advancing model interpretability and building trust in AI systems. It focuses on delivering reasoning-driven outputs, setting a new standard for transparency.

DeepSeek’s models are open source under the MIT license, so their weights and tools are freely available. Its distilled models, smaller and more efficient versions of the main model, make it accessible on modest hardware while retaining much of its performance. The model excels at context matching, adapting its responses to user inputs with high precision.

Its API supports tasks that require logic, coherence, and fluency. Under the hood, DeepSeek relies on techniques such as Group Relative Policy Optimization (GRPO) for reinforcement learning, Mixture of Experts (MoE) for efficient parameter scaling, and Chain of Thought (CoT) for step-by-step reasoning.

NVIDIA called DeepSeek’s R1 model “an excellent AI advancement,” even though the launch of this Chinese startup’s model triggered a 17% single-day drop in NVIDIA’s stock.

DeepSeek V3

DeepSeek-V3 serves as the foundation for the subsequent reasoning models, including DeepSeek-R1 and DeepSeek-R1-Zero. It uses a Mixture of Experts (MoE) architecture with 671 billion total parameters, of which 37 billion are activated for each token. Trained on 14.8 trillion high-quality tokens, it is designed to support advanced reasoning and strong multitask performance across a wide range of applications.

Key Features:

- Parameter Scaling: 671B total MoE parameters, 37B activated per token.

- Training Data: 14.8 trillion high-quality tokens.

- Applications: Advanced reasoning and multitask performance.
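To make the gap between total and activated parameters concrete, here is a minimal Python sketch of generic top-k expert routing. It assumes a plain softmax router over eight toy experts; the expert functions, router logits and k=2 are illustrative, and DeepSeek-V3’s actual router differs in its details, so treat this as an illustration of the MoE idea rather than DeepSeek’s implementation.

```python
import math
from typing import Callable, List, Tuple

Expert = Callable[[List[float]], List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: List[float], experts: List[Expert], router_logits: List[float], k: int = 2) -> List[float]:
    """Run only the selected experts and return the gate-weighted sum of their outputs."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)  # the other experts are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Toy setup (illustrative): 8 experts that each scale the input by a different factor.
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # router scores for one token

print([i for i, _ in top_k_route(logits, k=2)])  # [4, 1]
print(moe_forward([1.0, 2.0], experts, logits, k=2))
print(f"Active share in DeepSeek-V3: {37 / 671:.1%}")  # 5.5%
```

Only the selected experts do any work for a given token, which is how a model can hold 671B parameters while computing with roughly 37B of them (about 5.5%) per token.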

DeepSeek R1-Zero

DeepSeek-R1-Zero represents a bold experiment in AI training. It applies pure reinforcement learning directly to the base model, without any supervised fine-tuning on labeled data. This approach eliminates the dependence on supervised datasets, relying instead on trial-and-error learning to develop reasoning capabilities.

Training Approach:

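- Used the Group Relative Policy Optimization (GRPO) RL framework, which scores each answer against a group of sampled answers instead of training a separate critic model.

- Predefined rules, rather than a learned reward model, guided the scoring process: accuracy rewards checked whether an answer was correct, and format rewards checked that the reasoning was placed inside designated thinking tags.

- For tasks like mathematics, the model was rewarded when its final answer, given in a specified format, could be verified as correct.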
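The core of GRPO can be sketched in a few lines of Python: sample a group of answers for the same prompt, score each with rule-based checks, and use the group’s own mean and standard deviation as the baseline instead of a critic model. This is a minimal sketch under stated assumptions: the 1.0/0.5 reward weights, the \boxed{} answer convention and the function names are illustrative, and the full method also includes a clipped policy update and a KL penalty that are omitted here.

```python
import re
import statistics
from typing import List

BOXED = re.compile(r"\\boxed\{([^}]*)\}")
THINK = re.compile(r"<think>.*?</think>", re.DOTALL)


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Toy rule-based reward (assumed weights): +0.5 for format, +1.0 for a correct answer."""
    reward = 0.0
    if THINK.search(completion):  # format reward: reasoning wrapped in think tags
        reward += 0.5
    match = BOXED.search(completion)  # accuracy reward: compare the boxed final answer
    if match and match.group(1).strip() == reference_answer.strip():
        reward += 1.0
    return reward


def group_relative_advantages(rewards: List[float]) -> List[float]:
    """GRPO-style advantage: each reward normalized by its own group's mean and std (no critic)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std is one possible choice
    if std == 0:
        return [0.0] * len(rewards)  # all answers scored the same: no learning signal
    return [(r - mean) / std for r in rewards]


# A group of sampled completions for the prompt "What is 2 + 2?"
group = [
    "<think>2 + 2 = 4</think> The answer is \\boxed{4}",
    "<think>maybe 5?</think> \\boxed{5}",
    "\\boxed{4}",
    "I am not sure.",
]
rewards = [rule_based_reward(c, "4") for c in group]
print(rewards)  # [1.5, 0.5, 1.0, 0.0]
print(group_relative_advantages(rewards))
```

Answers that beat their group’s average get a positive advantage and are reinforced; the rest are discouraged, with no separate value network to train.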

Advantages:

- Demonstrated that reasoning capabilities can evolve autonomously, without labeled data.

- Removed costly labeling bottlenecks for faster and more scalable development in the long run.

Challenges:

- Struggled with readability and language mixing due to the absence of structured training data.

DeepSeek R1

To overcome the limitations of R1-Zero, DeepSeek-R1 employed a multi-stage training strategy:

- Fine-Tuning: Cold-start data was used to establish a structured foundation.

- Pure RL Phase: Reinforcement learning was applied to enhance reasoning skills.

- Rejection Sampling: The model generated synthetic data from its RL outputs, selecting the best examples for further training.

- Merging Data: Combined synthetic data with supervised data from DeepSeek-V3-Base, enriching domain-specific knowledge.

- Final RL Training: Conducted across diverse prompts to ensure generalization.
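The rejection-sampling stage can be illustrated with a small, hypothetical Python sketch: generate several candidates per prompt, keep only those that pass a check, and collect the survivors as fine-tuning data. The toy generator and the check_sum verifier below are assumed stand-ins for the RL model and DeepSeek’s actual filtering, which the description above only characterizes as selecting the best examples.

```python
import random
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    generate: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
    samples_per_prompt: int = 4,
) -> List[Tuple[str, str]]:
    """Generate several candidates per prompt and keep only those that pass the check."""
    dataset: List[Tuple[str, str]] = []
    for prompt in prompts:
        for _ in range(samples_per_prompt):
            completion = generate(prompt)
            if is_acceptable(prompt, completion):
                dataset.append((prompt, completion))  # accepted: becomes fine-tuning data
    return dataset


# --- Hypothetical stand-ins for the policy model and the verifier ---
def make_toy_generator(seed: int = 0) -> Callable[[str], str]:
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        a, b = map(int, prompt.split("+"))
        return str(a + b + rng.choice([0, 0, 1]))  # wrong by one about a third of the time

    return generate


def check_sum(prompt: str, completion: str) -> bool:
    a, b = map(int, prompt.split("+"))
    return completion == str(a + b)


data = rejection_sample(["2+3", "10+7", "1+1"], make_toy_generator(seed=0), check_sum)
print(f"kept {len(data)} of 12 candidates:", data)
```

Keeping only verified samples lets the pipeline reuse the model’s own outputs as training data without hand-labeling each one.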

🚀 DeepSeek-R1 is here!

⚡ Performance on par with OpenAI-o1

📖 Fully open-source model & technical report

🏆 MIT licensed: Distill & commercialize freely!

🌐 Website & API are live now! Try DeepThink at https://t.co/v1TFy7LHNy today!

DeepSeek (@deepseek_ai), January 20, 2025

This approach addressed the readability and language-mixing issues of R1-Zero while progressively improving the model’s performance. Each stage builds on the previous one, and the combination of synthetic and supervised data produces well-structured, high-performing outputs across diverse use cases, making the model a valuable asset for custom enterprise software development.

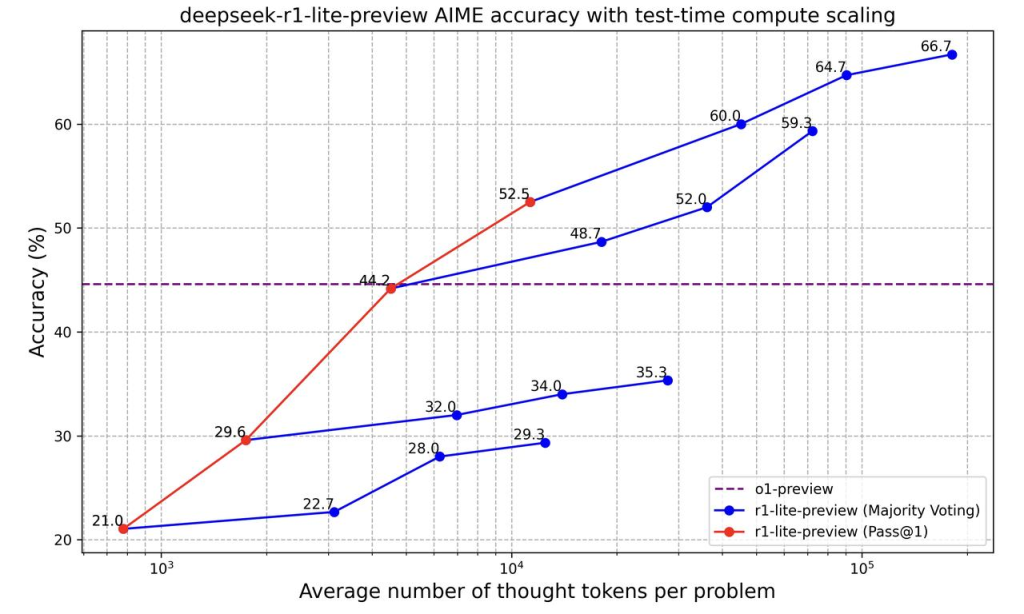

DeepSeek R1-Lite-Preview

DeepSeek-R1-Lite-Preview is an optimized and lighter version of R1. It is designed to prioritize efficiency and accessibility. It maintains the core reasoning capabilities of R1 while being optimized for tasks requiring fast and transparent decision-making.

Features:

- Simplified architecture focused on inference speed.

- Transparent, step-by-step reasoning capabilities.

- Efficient for diverse real-world use cases.

Applications: This model is ideal for organizations and researchers needing a high-performance reasoning model with a lower resource footprint. It ensures robust decision-making while being accessible to broader audiences.

Comparison Table of DeepSeek Models

Below is an easy-to-refer comparison table on different DeepSeek models released so far:

| Model | Parameters | Training Approach | Key Details | Challenges | Release Date |
|---|---|---|---|---|---|
| DeepSeek-V3 | 671B (MoE), 37B activated | Supervised Learning | Strong baseline for scalable learning | None | Dec 2024 |
| DeepSeek-R1-Zero | Derived from V3 | Pure RL (GRPO Framework) | Eliminated labeled data dependence | Readability, language mixing | Jan 2025 |
| DeepSeek-R1 | Enhanced V3 | Multi-Stage (Cold Start, Pure RL, Rejection Sampling, SFT) | Resolved R1-Zero issues with structured learning | None | Jan 2025 |
| DeepSeek-R1-Lite-Preview | Simplified R1 | Optimized Inference | Accessible, efficient reasoning | None | Nov 2024 |

Why is DeepSeek Cost-Efficient?

DeepSeek’s cost efficiency stems from its innovative approach to training models. By greatly reducing the need for labeled datasets, it avoids much of the time-consuming and expensive process of data annotation. Instead, it relies on reinforcement learning (RL), which allows models to learn autonomously through trial and error. This approach reduces upfront costs and creates a scalable framework for long-term development.

Another factor is the use of the chain of thought (CoT) technique, which enhances reasoning during both training and inference, optimizing resource usage. Additionally, DeepSeek’s open-source nature significantly lowers costs for developers and organizations, as it allows free access to model weights and outputs, enabling fine-tuning without licensing restrictions. This combination makes DeepSeek an affordable yet powerful solution.

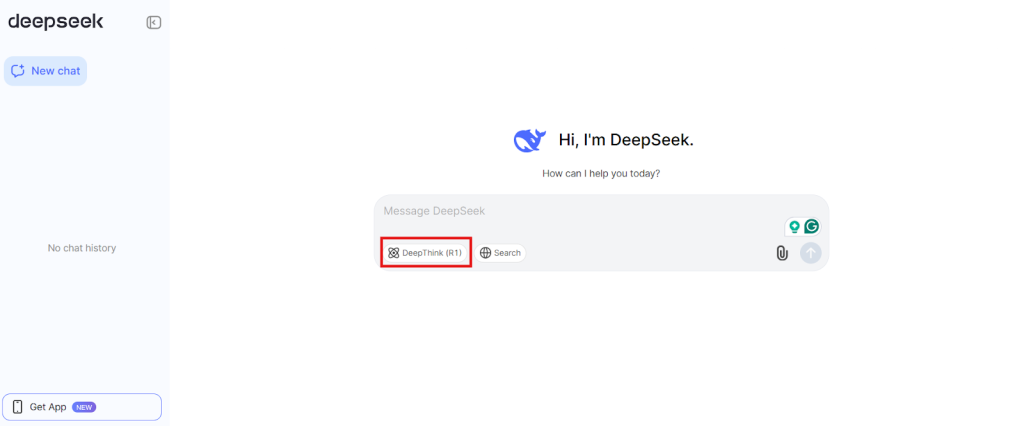

How to Use DeepSeek?

Using the DeepSeek platform is straightforward. Follow these steps to get started:

- Visit the DeepSeek Platform

  Go to the official DeepSeek platform or access the API documentation at DeepSeek API.

- Sign Up or Log In

  Create an account or log in with your existing credentials to access the platform.

- Select Your Model

  Choose the desired model for your task:

  - DeepSeek-R1 for advanced reasoning tasks.

  - DeepSeek-R1-Lite-Preview for optimized inference.

  - Distilled models for lightweight tasks.

  Then decide where to run it:

  - Running the Model Locally: If you wish to run DeepSeek on your local machine, download the necessary files and dependencies from the website. Ensure all prerequisites, such as Python and the required libraries, are installed.

  - Running Online: To use DeepSeek-R1 online, enable the “DeepThink” option in the interface to activate R1 capabilities.

- Configure Your Inputs

  - For the web interface: Enter your query or task in the input box.

  - For the API: Specify the model by setting model=deepseek-reasoner, along with any other relevant configuration.

- Run Your Task

  - Submit your inputs and let DeepSeek process them.

  - If you are using the API, configure additional parameters such as token limits, output formats, or task-specific rules.

- Analyze Outputs

  DeepSeek returns its results along with its thought process for explainability. Review both the answer and the step-by-step reasoning behind it.
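For the API route, a minimal Python sketch of a request to the reasoning model follows. It assumes DeepSeek’s OpenAI-compatible chat completions endpoint at https://api.deepseek.com/chat/completions and a reasoning_content field in the response, as described in DeepSeek’s API documentation at the time of writing; the DEEPSEEK_API_KEY environment variable name is hypothetical. Check the current docs before relying on any of these names.

```python
import json
import os
import urllib.request
from typing import Tuple

# Assumed endpoint, per DeepSeek's OpenAI-compatible API docs at the time of writing.
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(prompt: str, max_tokens: int = 2048) -> dict:
    """Build the JSON body for one chat turn with the R1 reasoning model."""
    return {
        "model": "deepseek-reasoner",  # selects DeepSeek-R1, as in the steps above
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # token limit for the generated output
    }


def ask_deepseek(prompt: str, api_key: str) -> Tuple[str, str]:
    """POST the request and return (reasoning, answer). Needs network access and a valid key."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(build_request(prompt)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    message = data["choices"][0]["message"]
    # Assumed field name for the model's chain of thought; the final answer is in "content".
    return message.get("reasoning_content", ""), message["content"]


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        thoughts, answer = ask_deepseek("Is 97 a prime number?", key)
        print("Reasoning:\n", thoughts)
        print("Answer:\n", answer)
    else:
        # No key: just show the request body that would be sent.
        print(json.dumps(build_request("Is 97 a prime number?"), indent=2))
```

Without a key, the script only prints the request body, which is a convenient way to inspect what would be sent before spending any tokens.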

Testing DeepSeek R1 on Different Use Cases

We tested DeepSeek with a complex mathematics question. The system took around 3 minutes to process it but ultimately failed to respond, leaving the output incomplete, as shown in the image below.

In comparison, ChatGPT answered the same question within seconds with a clear and concise solution. DeepSeek struggled with the complexity, suggesting a significant gap in its ability to handle high-level mathematical reasoning efficiently.

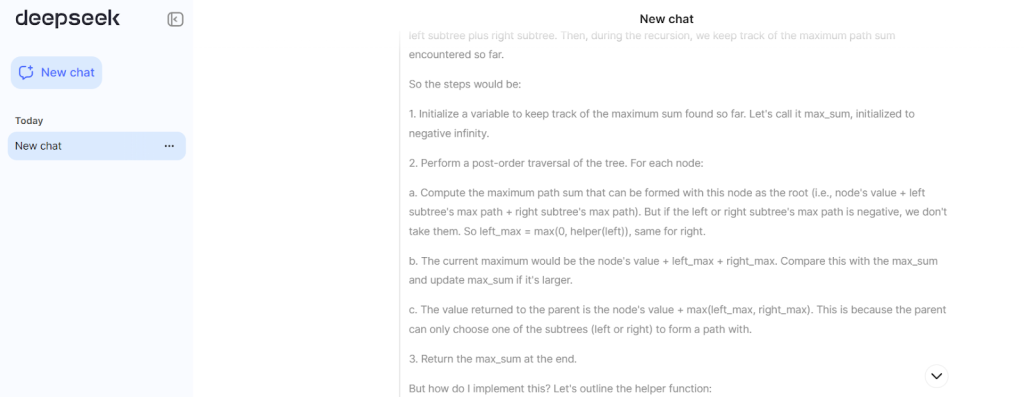

We then gave DeepSeek R1 a coding problem involving binary trees to evaluate its capabilities. Its response was about 90% accurate, showing its potential for solving complex coding challenges.

However, the processing time was noticeably long, taking around 5 minutes to generate the solution. While the accuracy is commendable, the delay could be a limitation for workflows requiring faster feedback.

Next, we tested DeepSeek R1 for logical reasoning tasks. It processed the request in around 17 seconds and provided a proper and accurate response. The faster processing time for this category was impressive and made it suitable for tasks requiring quick logical analysis.

One feature we found particularly helpful in DeepSeek R1 was the “thoughts” it displayed before printing the output. This added layer of transparency not only made the process more understandable but also provided users with valuable insights into how the solution was derived. It enhanced trust and allowed for better learning and debugging opportunities.
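If you run the open-weight R1 models yourself, the same “thoughts” arrive inline in the raw output. The sketch below assumes they are wrapped in <think>...</think> tags, which is how the released R1 checkpoints format their reasoning; some prompt templates insert the opening tag themselves, so the function also handles output that contains only the closing tag. The split_thoughts name is hypothetical.

```python
import re
from typing import Tuple

THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_thoughts(raw_output: str) -> Tuple[str, str]:
    """Return (thoughts, answer) from raw R1-style output."""
    match = THINK_BLOCK.search(raw_output)
    if match:
        thoughts = match.group(1).strip()
        answer = (raw_output[: match.start()] + raw_output[match.end():]).strip()
        return thoughts, answer
    if "</think>" in raw_output:
        # The opening tag was supplied by the prompt template, so only the closing tag appears.
        thoughts, _, answer = raw_output.partition("</think>")
        return thoughts.strip(), answer.strip()
    return "", raw_output.strip()  # no reasoning section found


raw = "<think>\n97 is not divisible by 2, 3, 5 or 7, and 11 * 11 > 97.\n</think>\n\n97 is prime."
thoughts, answer = split_thoughts(raw)
print("Thoughts:", thoughts)
print("Answer:", answer)  # Answer: 97 is prime.
```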

DeepSeek’s Janus Series

Janus is DeepSeek’s open-source multimodal AI model designed for tasks like image understanding and generation. According to DeepSeek, the latest version, Janus-Pro-7B, outperforms models like OpenAI’s DALL-E 3 and Stability AI’s Stable Diffusion on text-to-image generation benchmarks such as GenEval and DPG-Bench.

You can access the model on the Janus GitHub repository.

Key Features:

- Unified Transformer Architecture: Janus decouples visual encoding into separate pathways for image understanding and image generation, while a single unified transformer processes both, improving its ability to interpret and generate images.

- Extensive Training Data: The model was trained on over 90 million samples, including 72 million synthetic images and real-world data, ensuring detailed and accurate outputs.

- Open Source: Janus is fully open source, giving developers free access to explore and use the model for various applications.

Benchmarks of DeepSeek

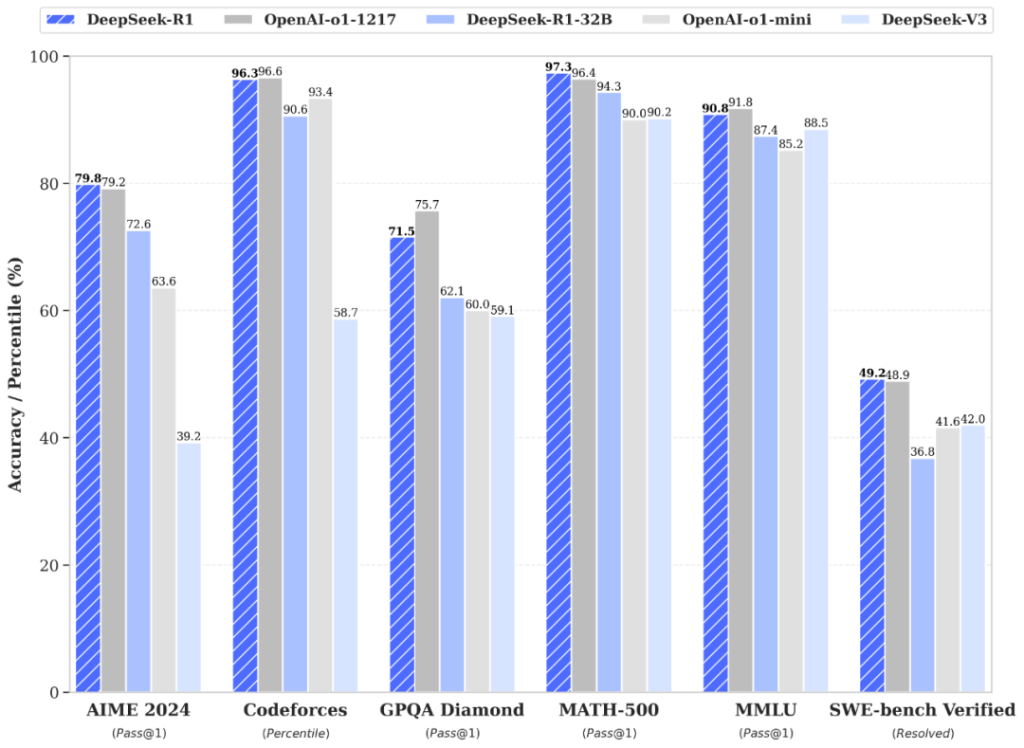

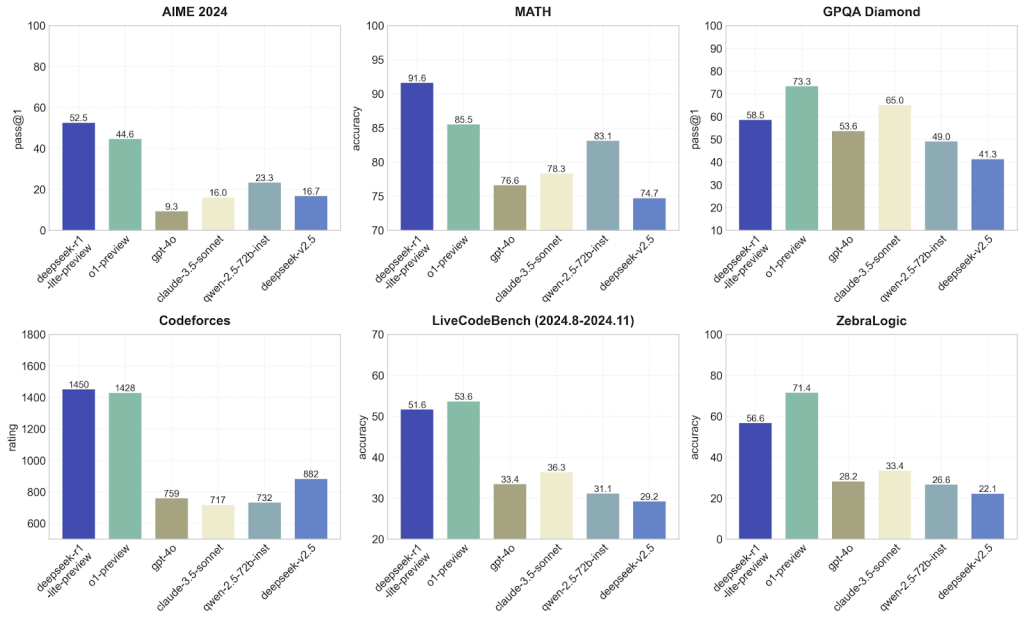

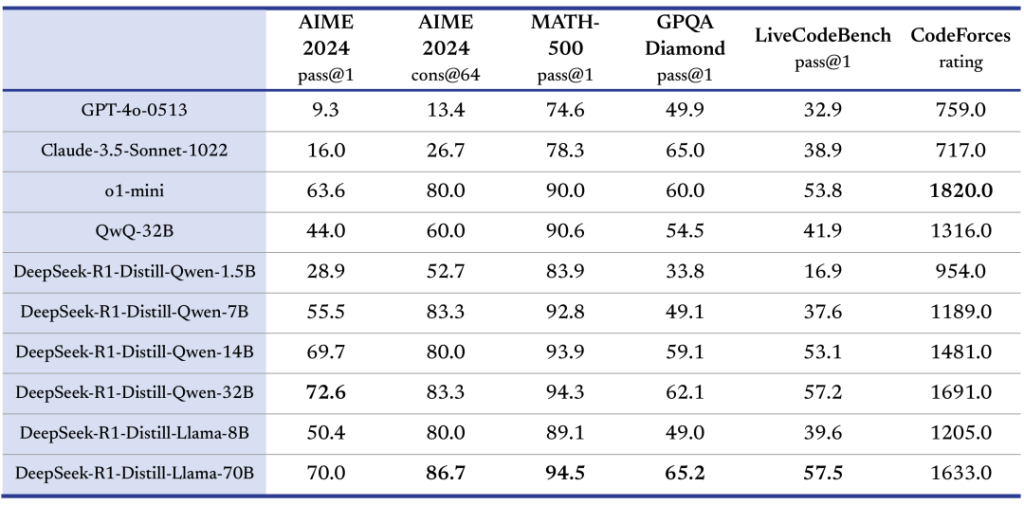

DeepSeek has been rigorously tested across multiple benchmarks, showcasing its strengths in reasoning, mathematics, and logical tasks. Here’s a summary of its performance:

- AIME 2024 (Mathematics): DeepSeek-R1-Zero reached 86.7% with majority voting over 64 samples (71.0% pass@1), exceeding OpenAI-o1-0912’s majority-voting score.

- Mathematics and Logical Reasoning: Demonstrated transparent thought processes and steady improvements with longer chain-of-thought (CoT) steps.

- MATH Benchmark: DeepSeek-R1-Lite-Preview achieved 91.6% accuracy, outperforming OpenAI-o1-preview on similar tasks.

- Reasoning Tasks: Proved capable of producing reasoning-driven outputs across diverse scenarios with superior coherence and fluency metrics.

- Context Matching: Displayed high accuracy in adapting responses to varied prompts, making it suitable for complex tasks.

- Chain-of-Thought Performance: Enhanced step-by-step reasoning during training and inference, reinforcing its capabilities in structured tasks.

- OpenAI-o1 Comparison: Demonstrated comparable or superior performance in specific benchmarks while addressing gaps in transparency and explainability.
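Benchmark scores like these are usually reported either as pass@1 or with majority voting, and the two can differ noticeably. A short Python sketch shows how each is computed: pass@1 is estimated here as the average correctness of k sampled answers per question, and majority voting (cons@k) checks whether the most common sampled answer is correct. The two questions and their sampled answers are made up for illustration.

```python
from collections import Counter
from typing import List


def pass_at_1(samples: List[List[str]], answers: List[str]) -> float:
    """pass@1 estimated from k samples: mean per-question fraction of correct samples."""
    per_question = [sum(s == ans for s in outs) / len(outs) for outs, ans in zip(samples, answers)]
    return sum(per_question) / len(per_question)


def majority_vote_accuracy(samples: List[List[str]], answers: List[str]) -> float:
    """cons@k: fraction of questions whose most frequent sampled answer is correct."""
    correct = 0
    for outs, ans in zip(samples, answers):
        top_answer, _ = Counter(outs).most_common(1)[0]
        correct += int(top_answer == ans)
    return correct / len(answers)


# Two made-up questions, four sampled answers each
samples = [["4", "4", "5", "4"], ["9", "9", "7", "8"]]
answers = ["4", "9"]
print(pass_at_1(samples, answers))  # 0.625
print(majority_vote_accuracy(samples, answers))  # 1.0
```

Majority voting smooths over occasional wrong samples, which is why a model’s majority-voting score on a benchmark can sit well above its pass@1.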

Wrapping Up

DeepSeek makes advanced AI accessible to everyone, offering powerful capabilities that are free and easy to use. Whether you’re solving coding challenges, logical reasoning problems, or exploring new possibilities, it delivers solid results in many cases, with helpful transparency into how it reaches them.

With its focus on providing cutting-edge technology, DeepSeek helps users tackle complex tasks and gain deeper insights. It’s a step forward in making AI tools available for everyone, regardless of expertise.

Start exploring today and see how DeepSeek can simplify your workflows and expand your horizons.